Projects

Research Projects

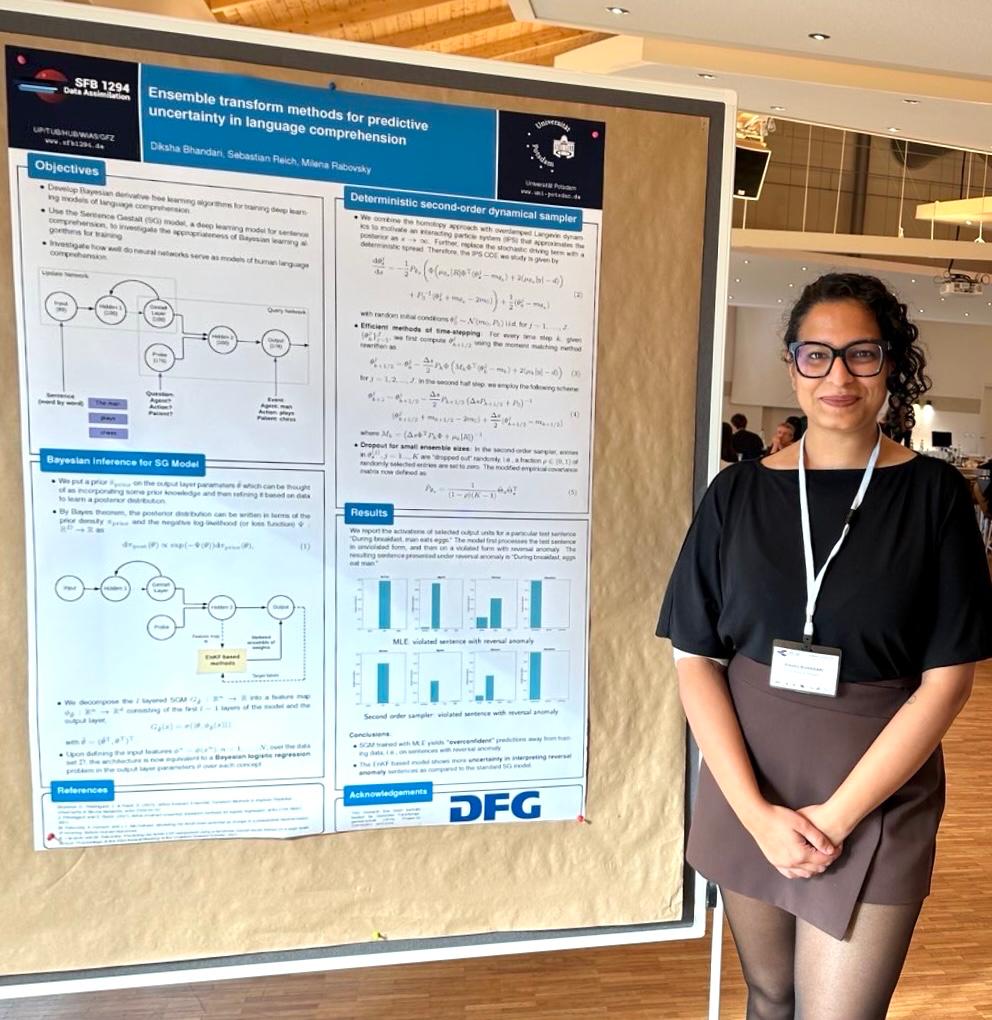

Ensemble transform methods for neural network uncertainty

We propose ensemble transform methods that improve predictive uncertainty quantification in neural networks. The methods are affine-invariant and can be applied to any pre-trained network. They build on ensemble transform Kalman filter (ETKF)-based MCMC methods for efficient posterior sampling and provide robustness against model misspecification.
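The ETKF-based samplers themselves are not spelled out here. As a simpler stand-in that shows what "affine-invariant" means for an ensemble MCMC method, the sketch below implements the Goodman-Weare stretch move. This is a different, more basic sampler than the ETKF-based methods above. The function name `stretch_move_sampler`, the stretch parameter `a`, and the toy Gaussian target are hypothetical choices for illustration.

```python
import math
import random


def stretch_move_sampler(log_prob, init_ensemble, n_steps, a=2.0, seed=0):
    """Goodman-Weare stretch-move ensemble MCMC; returns the ensemble after each sweep."""
    rng = random.Random(seed)
    ensemble = [list(x) for x in init_ensemble]
    n_walkers, dim = len(ensemble), len(ensemble[0])
    log_p = [log_prob(x) for x in ensemble]
    chain = []
    for _ in range(n_steps):
        for k in range(n_walkers):
            # Choose a partner walker j != k uniformly at random.
            j = rng.randrange(n_walkers - 1)
            if j >= k:
                j += 1
            # Stretch factor z ~ g(z) proportional to 1/sqrt(z) on [1/a, a], via inverse CDF.
            z = (1.0 + (a - 1.0) * rng.random()) ** 2 / a
            # Move walker k along the line through walker j.
            proposal = [xj + z * (xk - xj) for xk, xj in zip(ensemble[k], ensemble[j])]
            lp_new = log_prob(proposal)
            # Metropolis-Hastings acceptance with the z^(d-1) factor.
            log_accept = (dim - 1) * math.log(z) + lp_new - log_p[k]
            if rng.random() < math.exp(min(0.0, log_accept)):
                ensemble[k], log_p[k] = proposal, lp_new
        chain.append([list(x) for x in ensemble])
    return chain


# Badly scaled 2-D Gaussian: x1 ~ N(1, 1), x2 ~ N(-2, 10^2) (unnormalised log density).
def log_gauss(x):
    return -0.5 * ((x[0] - 1.0) ** 2 + ((x[1] + 2.0) / 10.0) ** 2)


init_rng = random.Random(1)
init = [[init_rng.gauss(0.0, 1.0), init_rng.gauss(0.0, 1.0)] for _ in range(20)]
chain = stretch_move_sampler(log_gauss, init, n_steps=2000)
samples = [x for sweep in chain[500:] for x in sweep]  # drop 500 burn-in sweeps
mean = [sum(s[i] for s in samples) / len(samples) for i in range(2)]
print(mean)
```

Because every proposal is built from differences between walkers, applying an affine map to both the target and the initial ensemble transforms the whole chain in the same way. This is why the badly scaled second coordinate needs no separate step-size tuning.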

Bayesian inference of human language comprehension

We applied ensemble Kalman methods to simulate and infer uncertainty in the Sentence Gestalt model, a neural network model of human language comprehension. We used a scoring-rule-based Bayesian formulation to handle model misfit and explored the robustness of inference in probabilistic psycholinguistic models.
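For readers unfamiliar with ensemble Kalman methods, the sketch below shows one perturbed-observation ensemble Kalman update (as in ensemble Kalman inversion) for a scalar parameter and a scalar observation. It is a toy linear-Gaussian example, not the Sentence Gestalt setup. The function name `ensemble_kalman_update`, the forward model `G(theta) = 2 * theta`, and all numbers are hypothetical.

```python
import random


def ensemble_kalman_update(thetas, forward, y, noise_var, rng):
    """One perturbed-observation ensemble Kalman update for a scalar parameter
    and a scalar observation y = G(theta) + eta, eta ~ N(0, noise_var)."""
    m = len(thetas)
    preds = [forward(t) for t in thetas]
    t_mean = sum(thetas) / m
    g_mean = sum(preds) / m
    # Sample cross-covariance Cov(theta, G) and variance Var(G) across the ensemble.
    c_tg = sum((t - t_mean) * (g - g_mean) for t, g in zip(thetas, preds)) / (m - 1)
    c_gg = sum((g - g_mean) ** 2 for g in preds) / (m - 1)
    gain = c_tg / (c_gg + noise_var)
    # Each member is nudged toward its own perturbed copy of the observation.
    return [
        t + gain * (y + rng.gauss(0.0, noise_var ** 0.5) - g)
        for t, g in zip(thetas, preds)
    ]


# Toy linear-Gaussian check: prior theta ~ N(0, 1), G(theta) = 2 * theta,
# y = 3, noise variance 0.25. The exact posterior is N(24/17, 1/17).
rng = random.Random(0)
prior = [rng.gauss(0.0, 1.0) for _ in range(2000)]
posterior = ensemble_kalman_update(prior, lambda t: 2.0 * t, 3.0, 0.25, rng)
post_mean = sum(posterior) / len(posterior)
post_var = sum((t - post_mean) ** 2 for t in posterior) / (len(posterior) - 1)
print(post_mean, post_var)
```

For a linear forward model and Gaussian prior, a single update with a large ensemble approximately recovers the exact posterior. For nonlinear models such as a neural network, the same update is only an approximation, which is one reason to pair it with a formulation that accounts for model misfit.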

Likelihood-free Bayesian inference in generative models

We developed novel sampling algorithms that combine kernel-based scoring rules with ensemble transform MCMC samplers for likelihood-free inference in high-dimensional generative models. Applications include dynamical systems such as the stochastic Lorenz-96 model.
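One common way to pair a scoring rule with Bayesian updating is the generalized posterior π(F | y) ∝ π(F) exp(−w S(P_F, y)), where S is estimated from simulator draws. The sketch below assumes that form and shows only the target density; it does not implement the ensemble transform MCMC sampler. The remaining assumptions are as follows:

- The kernel score uses a Gaussian kernel with a hand-picked bandwidth.
- The simulator is a single-scale Lorenz-96 model with additive noise, integrated by Euler-Maruyama. The project's exact stochastic variant may differ.
- All function names, the prior, and the parameter values are hypothetical.

```python
import math
import random


def gaussian_kernel(x, y, bandwidth):
    """Gaussian kernel k(x, y) = exp(-||x - y||^2 / (2 h^2))."""
    sq = sum((a - b) * (a - b) for a, b in zip(x, y))
    return math.exp(-sq / (2.0 * bandwidth * bandwidth))


def kernel_score(samples, y, bandwidth=1.0):
    """Unbiased estimate of the kernel score S(P, y) from m >= 2 draws of P.

    S(P, y) = 1/2 E[k(X, X')] - E[k(X, y)] + 1/2 k(y, y); lower is better.
    """
    m = len(samples)
    pairs = sum(
        gaussian_kernel(samples[i], samples[j], bandwidth)
        for i in range(m)
        for j in range(m)
        if i != j
    )
    cross = sum(gaussian_kernel(x, y, bandwidth) for x in samples) / m
    return 0.5 * pairs / (m * (m - 1)) - cross + 0.5 * gaussian_kernel(y, y, bandwidth)


def stochastic_lorenz96(forcing, rng, n_vars=8, n_steps=400, dt=0.005, sigma=0.5):
    """Euler-Maruyama run of Lorenz-96 with additive noise (assumed variant).

    dx_k = [(x_{k+1} - x_{k-2}) x_{k-1} - x_k + F] dt + sigma dW_k, cyclic indices.
    Returns the state at the final time.
    """
    x = [forcing + 0.01 * rng.gauss(0.0, 1.0) for _ in range(n_vars)]
    for _ in range(n_steps):
        # Python's negative indexing handles the k-1 and k-2 wrap-around.
        drift = [
            (x[(k + 1) % n_vars] - x[k - 2]) * x[k - 1] - x[k] + forcing
            for k in range(n_vars)
        ]
        x = [
            x[k] + drift[k] * dt + sigma * math.sqrt(dt) * rng.gauss(0.0, 1.0)
            for k in range(n_vars)
        ]
    return x


def scoring_rule_log_posterior(forcing, y_obs, log_prior, weight=1.0, m=20,
                               bandwidth=10.0, seed=0):
    """Unnormalised log pi(F | y) = log pi(F) - w * S(P_F, y), S estimated by simulation."""
    lp = log_prior(forcing)
    if lp == -math.inf:
        return lp  # outside the prior support: skip the simulations
    rng = random.Random(seed)  # fixed seed: same random numbers for every F
    sims = [stochastic_lorenz96(forcing, rng) for _ in range(m)]
    return lp - weight * kernel_score(sims, y_obs, bandwidth)


def flat_prior(f):
    return 0.0 if 0.0 < f < 20.0 else -math.inf


# Synthetic "observation" from the same simulator at F = 8.
y_obs = stochastic_lorenz96(8.0, random.Random(123))
for f in (6.0, 8.0, 10.0):
    print(f, scoring_rule_log_posterior(f, y_obs, flat_prior))
```

Because the score is a Monte Carlo estimate, the log-posterior is noisy in F. Fixing the seed (common random numbers) makes each evaluation deterministic. The bandwidth of 10.0 was chosen by hand for this illustration. A data-driven choice such as the median heuristic is a common alternative.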

Ongoing & Miscellaneous

- to be added